In an ideal world…

In an ideal world, we would search for a piece of data with our favorite search engine and land on a page with a big download button. It would offer a few format options. We would pick the right one and off we'd go.

Unfortunately, we all know from bitter experience that this is not yet routinely how the real world operates. We’re getting there: there’s more data out there than ever before, but we still have a very long way to go.

In the real world we hopefully find the data we’re after. Maybe it’s published on a government website somewhere. There’s no big download button, just a big HTML table.

If we just need a snapshot of the data and it’s all on a single page, then with a bit of luck we can copy and paste it into a spreadsheet.

What if the data is spread over hundreds of pages or we need to keep it regularly updated? Well, we have to write a scraper.

If you know the basics of a language like PHP, Python or Ruby, writing a scraper isn’t very hard at all.
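To give a feel for how little code the scraping itself takes, here is a minimal sketch in Python that pulls the rows out of an HTML table using only the standard library. The `TableParser` class, the `parse_table` helper and the sample markup are made up for illustration; a real scraper would fetch the page over HTTP first, and real-world tables (nested tables, merged cells) may need more care.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that sits inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def parse_table(html):
    """Return the table as a list of rows, each a list of cell strings."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    return parser.rows


# Hypothetical sample page, standing in for a downloaded government web page.
SAMPLE_HTML = """
<table>
  <tr><th>Council</th><th>Applications</th></tr>
  <tr><td>Example Shire</td><td>12</td></tr>
  <tr><td>Sample City</td><td>7</td></tr>
</table>
"""

if __name__ == "__main__":
    for row in parse_table(SAMPLE_HTML):
        print(row)
```

The same idea carries over to PHP or Ruby; most people would reach for a dedicated HTML parsing library rather than a hand-rolled parser like this one.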

What is a pain is all the stuff around it. Where do I run it? How do I schedule it to run automatically? What if the website that I’m scraping changes? How do I check that the data is still coming in regularly? What do I do if I need an API so another application can regularly access the scraped data?

You can solve all of these things yourself, but why bother if something else can take care of them for you?

Introducing Morph.io

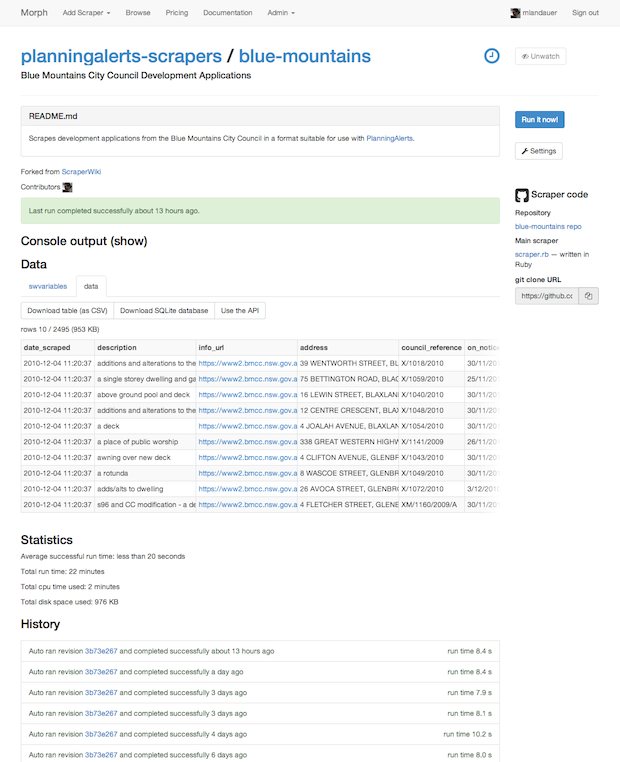

This is where Morph.io comes in. It’s a new free scraping platform made by the not-for-profit OpenAustralia Foundation.

The basic idea is that you write your scraper in PHP, Python or Ruby. You can do your scraping in pretty much whatever way you want. All that matters is that the final data is written to an SQLite database in your local directory. This gives you enormous power and flexibility while keeping the simple case nice and easy.
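As a rough sketch of that last step, the Python snippet below writes a few scraped records to an SQLite file using only the standard library. The file name `data.sqlite`, the table name `data`, the column names and the `save_rows` helper are assumptions made for this example, so check the Morph.io documentation for the names the platform actually expects.

```python
import sqlite3


def save_rows(rows, db_path="data.sqlite"):
    """Upsert scraped rows into a `data` table and return the table's row count.

    `db_path` and the table layout are assumptions for this sketch.
    """
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "CREATE TABLE IF NOT EXISTS data ("
                "council TEXT PRIMARY KEY, applications INTEGER)"
            )
            # INSERT OR REPLACE keeps one row per council, so re-running
            # the scraper updates existing records instead of duplicating them.
            conn.executemany(
                "INSERT OR REPLACE INTO data (council, applications) "
                "VALUES (:council, :applications)",
                rows,
            )
        (count,) = conn.execute("SELECT COUNT(*) FROM data").fetchone()
        return count
    finally:
        conn.close()


if __name__ == "__main__":
    # Hypothetical records, standing in for whatever the scraper collected.
    scraped = [
        {"council": "Example Shire", "applications": 12},
        {"council": "Sample City", "applications": 7},
    ]
    print(save_rows(scraped), "rows in data.sqlite")
```

Keying the table on something stable (here the council name) is a design choice that makes daily re-runs safe: the same record scraped twice simply overwrites itself.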

All your scraper code is stored in GitHub under version control. People can fork your scraper and contribute fixes, and everything on Morph.io integrates tightly with GitHub.

You can run that scraper from the command line or manually via the web interface. You can also schedule it to run automatically every day.

You can then download the resulting data as CSV or JSON, or even run a custom SQL query against your SQLite database using the API.
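Before going through the API, it can help to try a query locally against the same kind of SQLite database. The sketch below does not call Morph.io at all: it runs a custom SQL query with Python's standard library and renders the result as JSON and CSV. The `data` table, its columns and all helper names are hypothetical.

```python
import csv
import io
import json
import sqlite3


def query_rows(conn, sql, params=()):
    """Run a query and return the result as a list of plain dicts."""
    conn.row_factory = sqlite3.Row  # lets each row be addressed by column name
    return [dict(row) for row in conn.execute(sql, params)]


def to_json(rows):
    return json.dumps(rows, indent=2)


def to_csv(rows):
    if not rows:
        return ""
    buf = io.StringIO()
    writer = csv.DictWriter(
        buf, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def demo_connection():
    """Build a small in-memory database standing in for a scraper's output."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE data (council TEXT PRIMARY KEY, applications INTEGER)"
    )
    conn.executemany(
        "INSERT INTO data VALUES (?, ?)",
        [("Example Shire", 12), ("Sample City", 7)],
    )
    return conn


if __name__ == "__main__":
    conn = demo_connection()
    rows = query_rows(
        conn,
        "SELECT council, applications FROM data "
        "WHERE applications > ? ORDER BY council",
        (10,),
    )
    print(to_json(rows))
    print(to_csv(rows))
    conn.close()
```

Passing values as query parameters (the `?` placeholder) rather than pasting them into the SQL string is worth keeping as a habit, whichever way the query ends up being run.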

You can also watch your scraper (or anyone else’s) and get notified via email if the scraper errors.

You can work on your scraper on the command line and, when you’re happy, push the changes to GitHub. The next time your scraper runs on Morph.io (either manually or automatically), it will pick up your changes.

Apart from making the straightforward use case of hosting and running a scraper easy, the focus of Morph.io is on collaboration. It’s easy to collaborate with someone else on developing and maintaining a scraper - you use GitHub in the way you know and there’s no mucking around with server deployments or the like.

Migrating from ScraperWiki Classic

If you’re a user of ScraperWiki Classic you have probably already received an email letting you know that the ScraperWiki Classic service is shutting down. Sad news, as we’ve been long-time users of ScraperWiki ourselves. However, we’ve gotten together with the ScraperWiki folks to make it super easy to migrate your existing ScraperWiki Classic scrapers over to Morph.io. It literally only requires two clicks.

Open Source

Morph.io is also open source, licensed under the Affero GPL. So you can use Morph.io without any fear of vendor lock-in.

If your needs outgrow the hosted Morph.io service, you can install your own private instance.

Get started

Hopefully this has given you enough motivation to give Morph.io a try.

Go to Morph.io and write a scraper!

Feedback on how Morph.io is working for you is always appreciated.

We make tools, apps and insights using

open stuff

