Having co-organized csv,conf,v2 this past May, a few of us from Open Knowledge International had the awesome opportunity to travel to Berlin and sit in on a range of fascinating talks on the current state-of-the-art on wrangling messy data. Previously, I posted about Comma Chameleon by Stuart Harrison1. Another such talk was given by Sadayuki Furuhashi of Treasure Data2 who presented on tool he is developing called Embulk. Embulk is an open-source tool for moving messy data.

Friction in Data Transport

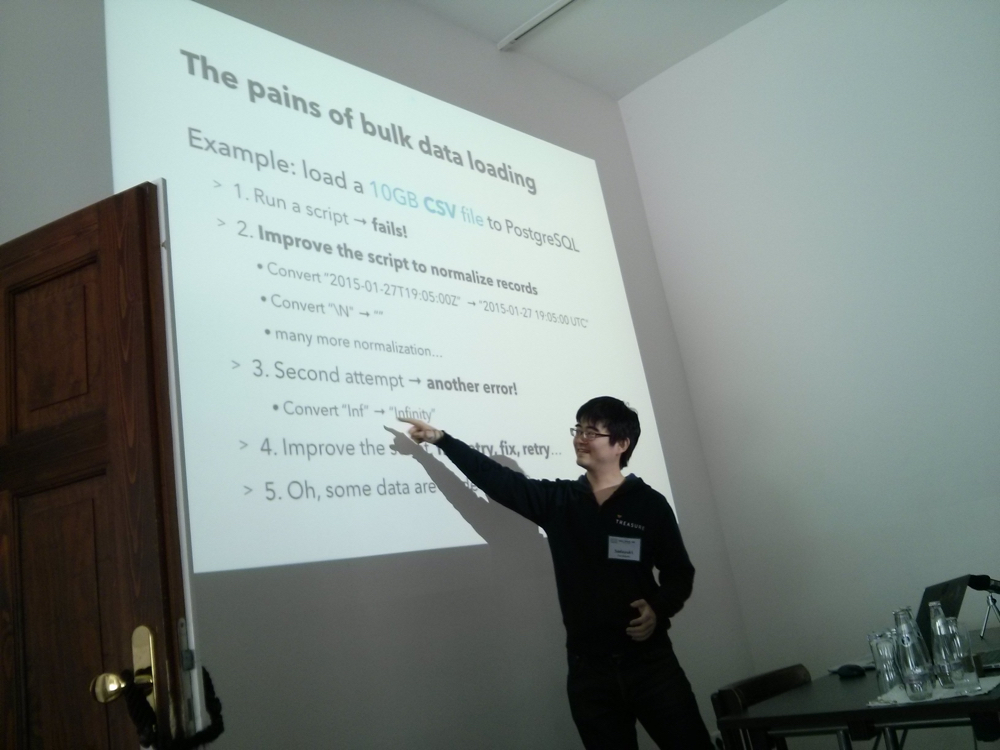

In his talk, Sadayuki talked about the friction commonly experienced in moving large amounts of data from one system to another. He gives a relatively simple example of trying to push a 10GB CSV file to PostgreSQL and encountering a series of issues—broken or missing records, unsupported time formats—that can typically be dealt with only through trial and error. Multiply these kinds of issues across the various and growing number of backends and file formats, and it quickly becomes clear that there’s not enough time in the day for data wranglers to write and optimize their scripts to move data flexibly and efficiently. Enter Embulk.

Embulk

Embulk is open-source tool for transporting massive, messy datasets—in parallel—from one system to another. In this context, “system” can refer to any number of endpoints including Amazon S3, an SQL database, or even a CSV file on your local computer. Embulk attempts to solve the issues above by creating a plugin-based framework that supports various data transport tasks, including file type and format guessing, processing, filtering, and encryption.

Specialized connectors for supporting different storage engines—RDBMSs, cloud services, etc.—as well as various file types —CSV, XML, JSON, HDF5, etc.—can be created by the community as plugins. The core of Embulk is actually quite small and gains most of its power from this plugin architecture.

Frictionless Data

While watching his presentation, it was clear that there is a lot of opportunity for collaboration between the work Embulk is doing and the ecosystem we’re trying to build through our Frictionless Data project. In our project, we’re looking to support easy and efficient transport of data primarily through the development and promotion of the Frictionless Data specifications and the development of various libraries, tools, and integrations. For instance, our Python library for reading and working with JSON Table Schema also supports a plugin-architecture for reading and storing data in a variety of backends. Currently, we have support for Pandas, BigQuery, and SQL (visit our User Stories page to vote for and comment on what you’d like to see next).

Embulk Guess and Data Packages

As an example of the potential overlap, we can demonstrate the

schema and dialect guessing that Embulk employs to load data. To

support loading CSV data into a variety of backends, Embulk needs a

good idea of the types of records (schema) and also the rules by

which these values are separated in the file (dialect). Embulk’s

guess function (embulk guess) makes guesses about the file

structure and outputs something similar to the datapackage.json (see

our specifications for more details). To demonstrate, we can

use Embulk’s convenient example function which creates an example

CSV. It looks like this:

id,account,time,purchase,comment

1,32864,2015-01-27 19:23:49,20150127,embulk

2,14824,2015-01-27 19:01:23,20150127,embulk jruby

3,27559,2015-01-28 02:20:02,20150128,"Embulk ""csv"" parser plugin"

4,11270,2015-01-29 11:54:36,20150129,NULL

The guess function reads the data and generates a configuration file

used by Embulk that looks like the following. Of particular interest

is the parser section.

in:

type: file

path_prefix: /Users/dan/Desktop/demo/csv/sample_

decoders:

- {type: gzip}

parser:

charset: UTF-8

newline: CRLF

type: csv

delimiter: ','

quote: '"'

escape: '"'

null_string: 'NULL'

trim_if_not_quoted: false

skip_header_lines: 1

allow_extra_columns: false

allow_optional_columns: false

columns:

- {name: id, type: long}

- {name: account, type: long}

- {name: time, type: timestamp, format: '%Y-%m-%d %H:%M:%S'}

- {name: purchase, type: timestamp, format: '%Y%m%d'}

- {name: comment, type: string}

out: {type: stdout}As you can see, embulk guess is powerful enough to go a bit further

than other similar type guessing functions by also guessing, for

example, that not only is a column a date, but also the expected date

format structure.

Many of the fields in the parser section can be represented in a

Data Package following the JSON Table Schema and

CSV Dialect Description Format specifications, which are

part of the Data Package specifications. Here’s what the

equivalent datapackage.json file would look like:

{

"name": "sample_01",

"resources": [

{

"name": "sample-01",

"path": "sample_01.csv",

"format": "csv",

"encoding": "UTF-8",

"dialect": {

"lineTerminator": "\r\n",

"delimiter": ",",

"quoteChar": "\"",

"escapeChar": "\"",

"header": true

}

"schema": {

"fields": [

{ "name": "id", "type": "integer" },

{ "name": "account", "type": "integer" },

{ "name": "time", "type": "datetime",

"format": "fmt:%Y-%m-%d %H:%M:%S" },

{ "name": "purchase", "type": "date",

"format": "fmt:%Y%m%d" },

{ "name": "comment", "type": "string" }

]

}

}

]

}The Potential Future

Embulk looks like a really powerful tool that can definitely be part

of the Frictionless Data ecosystem we envision. I would love to an

input plugin to read a datapackage.json to give Embulk’s CSV parser

what it needs. It would also be great to see an output plugin that

can produce a valid datapackage.json file with your data. Once

you’ve generated a schema using Embulk’s powerful guessing

functionality, publishing the schema with your data in a standard

format like the Data Package is an excellent step towards making

your data more findable and reusable. The Frictionless Data

project is, at its heart, about highlighting the benefits of adopting

just such a standardized containerization approach

to data. Of course, even without this, Embulk is a really powerful

tool for solving some of the problems of data transport today. Give

it a try!

- Download Embulk: https://github.com/embulk/embulk

- Follow Treasure Data on Twitter: https://twitter.com/TreasureData

- Follow Sadayuki Furuhashi on Twitter: https://twitter.com/frsyuki/

- Follow Open Knowledge Labs on Twitter: https://twitter.com/okfnlabs

- See the full range of speakers from csv,conf,v2: http://csvconf.com

See Sadayuki’s full talk:

-

Comma Chameleon: /blog/2016/07/18/comma-chameleon.html ↩

-

Treasure Data: https://www.treasuredata.com/ ↩

We make tools, apps and insights using

open stuff

We make tools, apps and insights using

open stuff

Comments